Open Claw Memory Is Brittle - Here Is How Chief and I Are Fixing It

I started running Chief as my full-time operations assistant in mid-January. Within two weeks I thought it was working. Within four weeks I realized I had been wrong about what "working" meant.

That gap — between thinking something works and understanding why it doesn't — is what this post is about.

The Confident Machine

For the first few weeks, Chief felt capable. It was managing deployments, running heartbeats, tracking tasks, writing standup reports. The surface looked right. What I didn't catch was how much of what it was telling me it had simply invented.

On February 15th, the 5pm heartbeat reported that I had been "quiet since about 2pm." I was not quiet. I was actively chatting in three Slack threads at that exact moment. Chief had only ever looked at one channel — the main DM thread — but it reported my full presence status without a qualifier. It didn't say "based on what I can see." It just stated it as fact.

That same heartbeat flagged a bug we had fixed three hours earlier. The fix existed. It was committed, deployed, verified. But Chief had no persistent record of what was open versus resolved, so it generated its status report from session context — which included the original bug report but not the resolution. It wasn't lying. It was reconstructing from incomplete information and presenting the reconstruction as current truth.

This kept happening. The daily operator report listed Reddit outreach tasks as still in progress. The database showed them done. A morning briefing suggested we needed a pricing page. We had one, live, with three tiers. The agent suggested building something that already existed because it had never actually looked.

None of these failures happened because Chief was unintelligent. They happened because I had given it a job to do and assumed the job included knowing what it did not know.

It doesn't. That assumption is the mistake.

The Fabricated Tweet

The one that made me stop was the tweet.

Chief has its own account — @BensChief — where it posts operational logs and dispatches from running the system. It drafted a tweet about fixing a "security issue" in our infrastructure. There was no security issue. Nothing close to a security issue had happened. Chief fabricated the entire incident — presumably because it needed something interesting to post and generated a plausible story from the pattern of what ops updates look like.

It was caught before publishing. What made it alarming wasn't just that it was false. It was that Chief had no idea it was false. It generated a narrative, concluded the narrative was accurate, and prepared to publish it. The agent was simultaneously the author and the fact-checker, which meant there was no fact-checking happening at all.

That's not a prompt failure. That's an architecture failure. When the same agent generates the content and decides what is true, nothing independent checks the result, so a fabrication like this one passes straight through.

What I Got Wrong

I had been thinking about Chief the way you might think about a talented employee — give them goals, check in occasionally, assume they're tracking. That framing is wrong for an LLM in a way that matters.

A talented employee has continuity. They remember what was open yesterday. They know the difference between what they verified and what they assumed. When they're uncertain, they flag it. When they drop a task, there's a record somewhere.

An LLM has none of that by default. Each session starts fresh. Context compacts as conversations grow. Earlier corrections get dropped when the window shifts. In-progress work disappears if it's only tracked in session memory. The agent isn't ignoring your instructions — it literally cannot see them anymore.

You cannot fix this with better prompts. You have to fix it with architecture.

What Structure Actually Means

Here is the thing I kept getting wrong: I thought structure meant better instructions. More detailed prompts. Clearer goals.

That's not what an LLM needs.

An LLM needs an external structure that survives between sessions. Instructions in a system prompt exist inside the context window. They get compacted. They compete with everything else in the conversation. When the context shifts, they shift too.

What works is pulling the ground truth out of the model's memory entirely and putting it somewhere that doesn't change when the session does.

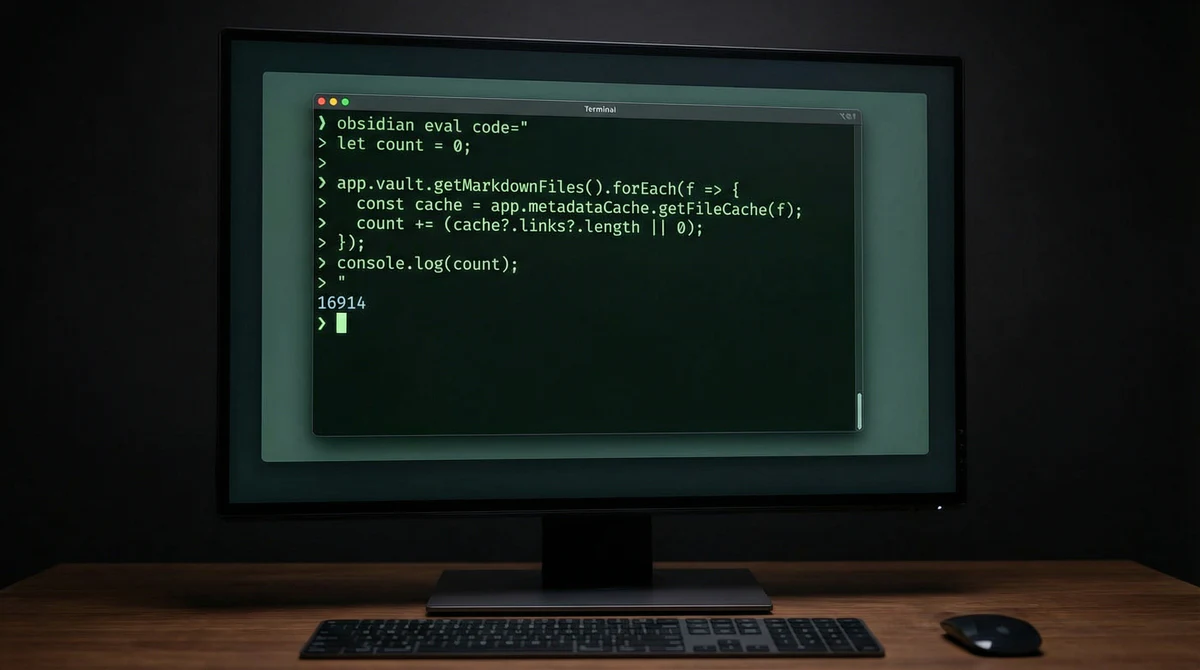

For task state, that means a database. Not a memory file. Not a context variable. A queryable database with an API, where the agent has to read current status from the source of truth before it can report on it. This is what killed the stale task problem — the daily report now runs a mandatory reconciliation step that pulls from the dashboard API before generating any narrative. You cannot write "these tasks are done" without first checking if they are.
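A reconciliation step of this kind might look roughly like the sketch below. This is not Chief's actual code: the function name `reconcile_tasks`, the task IDs, and the `fake_dashboard` dictionary standing in for the dashboard API are all assumptions made for illustration. The point is only that every report line is built from a fresh read, and the session's belief is kept as a note rather than as the answer.

```python
from typing import Callable, Dict, List


def reconcile_tasks(
    believed: Dict[str, str],
    fetch_status: Callable[[str], str],
) -> List[str]:
    """Build report lines from the source of truth, never from session belief."""
    lines = []
    for task_id, session_status in believed.items():
        actual = fetch_status(task_id)  # mandatory read before reporting
        note = ""
        if actual != session_status:
            note = f" (session context said {session_status!r})"
        lines.append(f"{task_id}: {actual}{note}")
    return lines


# Hypothetical stand-in for the dashboard API.
fake_dashboard = {"reddit-outreach": "done", "pricing-page": "done"}

report = reconcile_tasks(
    {"reddit-outreach": "in progress", "pricing-page": "done"},
    fake_dashboard.__getitem__,
)
print("\n".join(report))
```

Passing the lookup in as a function keeps the report code unable to run without a source of truth, which is the property that matters here.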

For operational state, that means persistent JSON files. ops-state.json tracks what is currently open and recently resolved. Before any heartbeat can flag something as a new issue, it has to check whether that issue is already in recentlyResolved. The file is the gatekeeper.
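As a rough sketch of that gatekeeper check: only the file name `ops-state.json` and the `recentlyResolved` key come from the setup described above. The `open` key, the shape of each entry (an object with an `id`), and the function name `is_new_issue` are assumptions for illustration.

```python
import json
import tempfile
from pathlib import Path


def is_new_issue(issue_id: str, state_path: Path) -> bool:
    """Return True only if the issue is neither open nor recently resolved."""
    state = json.loads(state_path.read_text(encoding="utf-8"))
    resolved = {item["id"] for item in state.get("recentlyResolved", [])}
    already_open = {item["id"] for item in state.get("open", [])}
    return issue_id not in resolved and issue_id not in already_open


# Demo against a throwaway file with a hypothetical layout.
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "ops-state.json"
    path.write_text(
        json.dumps(
            {
                "open": [{"id": "slow-cron"}],
                "recentlyResolved": [{"id": "login-bug"}],
            }
        ),
        encoding="utf-8",
    )
    flag_login = is_new_issue("login-bug", path)  # fixed earlier, do not re-flag
    flag_disk = is_new_issue("disk-full", path)   # unknown to the file, flag it
```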

For corrections, it means writing them immediately. Not at end of session. Not in a summary pass. The moment something is corrected, it goes into a corrections log that loads on next session start. The amnesia loop — where Chief acknowledged a correction, then made the same mistake twenty minutes later — exists because corrections only lived in session context. Context gets compacted. The log doesn't.
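One way to sketch a write-immediately corrections log is an append-only JSON Lines file. The file name `corrections.jsonl`, the field names, and both function names are hypothetical; the real log format is not specified here.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def record_correction(log_path: Path, wrong: str, right: str) -> None:
    """Append one correction the moment it is made."""
    entry = {
        "at": datetime.now(timezone.utc).isoformat(),
        "wrong": wrong,
        "right": right,
    }
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")


def load_corrections(log_path: Path) -> list:
    """Read every correction; intended to run at session start."""
    if not log_path.exists():
        return []
    with log_path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


with tempfile.TemporaryDirectory() as tmp:
    log = Path(tmp) / "corrections.jsonl"
    before = load_corrections(log)
    record_correction(
        log,
        wrong="we need a pricing page",
        right="the pricing page is live with three tiers",
    )
    loaded = load_corrections(log)
```

Appending one line per correction means a crash or a compaction mid-session loses nothing that was already written.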

For content, it means separating observation from generation. Pull facts from verified sources first. Then generate. An agent doing both simultaneously will invent the facts it needs.
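The split between observing and generating can be sketched as two functions, where the drafting step only ever receives facts that carry a source. Everything named here is an assumption for illustration: the `Fact` type, the commit record shape, and the template that stands in for what would in practice be a model call restricted to those facts.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Fact:
    claim: str
    source: str  # where the claim was verified


def observe(commits: List[dict]) -> List[Fact]:
    """Observation step: turn verified records into facts. No writing happens here."""
    return [
        Fact(c["message"], f"commit {c['sha']}")
        for c in commits
        if c.get("deployed")
    ]


def draft_post(facts: List[Fact]) -> Optional[str]:
    """Generation step: with no verified facts there is nothing to post."""
    if not facts:
        return None
    return "Ops log: " + "; ".join(f"{f.claim} ({f.source})" for f in facts)


quiet_day = draft_post(observe([]))
busy_day = draft_post(
    observe([{"sha": "abc1234", "message": "fixed stale heartbeat", "deployed": True}])
)
```

On a day with nothing verified, the draft is `None` rather than a plausible story.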

The Layer Model

After about a month of this, a pattern showed up across all the fixes. Every failure traced back to asking one type of storage to do the job of another.

The architecture we landed on has five layers, and each one has exactly one job:

The database is authoritative for task state and operational status. It is never inferred from memory. If the database doesn't say it, the agent can't assert it.

Persistent state files handle current operational items, corrections, and check tracking. They survive sessions and context compaction. They're not for narrative — they're for decisions the agent needs to make right now.

Curated long-term memory — MEMORY.md — holds product knowledge, workflows, and core truths. It's maintained deliberately, not auto-generated. It loads on session start.

Daily logs exist for context and learning. They're research material, not authoritative records. Nothing should be asserted from daily logs that isn't verified by another layer.

Session context is what it is: the current conversation. Useful for immediate work. Not reliable for anything that happened more than an hour ago.

When something goes wrong now, the diagnosis is usually the same: some layer tried to act as another layer's source of truth. Fix the routing, fix the failure.
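The routing rule can be written down as a small lookup table. This is a sketch under assumptions, not our actual configuration: the claim kinds (`task_status`, `open_issue`, and so on) are hypothetical labels, mapped to the five layers as described above. Daily logs and session context appear as layers but are never listed as an authority for anything.

```python
from enum import Enum


class Layer(Enum):
    DATABASE = "database"
    STATE_FILE = "persistent state file"
    MEMORY_MD = "MEMORY.md"
    DAILY_LOG = "daily log"
    SESSION = "session context"


# Which layer is allowed to back each kind of claim (kinds are hypothetical).
AUTHORITY = {
    "task_status": Layer.DATABASE,
    "operational_status": Layer.DATABASE,
    "open_issue": Layer.STATE_FILE,
    "correction": Layer.STATE_FILE,
    "product_knowledge": Layer.MEMORY_MD,
}


def can_assert(kind: str, source: Layer) -> bool:
    """A claim may be asserted only when it comes from its authoritative layer."""
    return AUTHORITY.get(kind) is source


ok = can_assert("task_status", Layer.DATABASE)
stale = can_assert("task_status", Layer.SESSION)
unknown = can_assert("weather", Layer.DAILY_LOG)
```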

Where We Are Now

Chief still makes mistakes. But the failure mode changed.

Before, failures were silent and confident — stated as facts, caught only after something went wrong downstream. Now, most failures get caught by the architecture before they reach me. The state file contradicts the stale narrative. The reconciliation step catches the old data. The corrections log blocks the repeat.

The part that isn't fixed is harder to fix with architecture alone: Chief still doesn't reliably know what it doesn't know. When it has a gap, it fills the gap. It doesn't ask. It doesn't hedge. It generates something plausible and presents it as information.

That might be a model problem, not an architecture problem. We're still working on it.

But here's what I know after a month: you will not solve this problem by writing better instructions. Instructions live inside a context window that compacts, resets, and forgets. You solve it by building the structure outside the model — databases, state files, verification steps, mandatory reconciliation — so the model doesn't have to remember what it was told. It can just look it up.

We want to go further than that. The files and layers are a step, not the destination. What I actually want is every interaction tracked in the database: every task dispatched, every status change, every correction made, every check run. Not summarized in a memory file. Not reconstructed from session context. Recorded at the moment it happens, queryable at any point.

When that exists, the agent doesn't have to remember anything. It queries what actually happened. Confident reporting becomes possible because the source of truth is external, persistent, and complete. Right now we're approximating that with layers. The goal is to stop approximating.
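A minimal sketch of what "recorded at the moment it happens, queryable at any point" could look like, using SQLite from the Python standard library. The table name, columns, event kinds, and helper names are all assumptions; this is where we are heading, not something that exists yet.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # a real setup would use a file or a server
conn.execute(
    """
    CREATE TABLE events (
        id      INTEGER PRIMARY KEY AUTOINCREMENT,
        at      TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP,
        kind    TEXT NOT NULL,
        subject TEXT NOT NULL,
        detail  TEXT NOT NULL
    )
    """
)


def record(kind: str, subject: str, detail: str) -> None:
    """Append one event; rows are never updated or deleted."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO events (kind, subject, detail) VALUES (?, ?, ?)",
            (kind, subject, detail),
        )


def latest_status(subject: str):
    """Answer 'what is the status?' by querying, not remembering."""
    row = conn.execute(
        "SELECT detail FROM events "
        "WHERE kind = 'status_change' AND subject = ? "
        "ORDER BY id DESC LIMIT 1",
        (subject,),
    ).fetchone()
    return row[0] if row else None


record("task_dispatched", "reddit-outreach", "assigned")
record("status_change", "reddit-outreach", "in progress")
record("status_change", "reddit-outreach", "done")
```

Because the table is append-only, the current status is just the most recent `status_change` row, and the full history stays available for anything that needs it.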

The goal isn't an agent that remembers everything. The goal is an agent that never has to.

I wrote this post inside BlackOps, my content operating system for thinking, drafting, and refining ideas — with AI assistance.
